
Coming Up - MIPI Sessions at embedded world North America 2025

MIPI Alliance, 14 May 2025



4-6 November 2025

Anaheim, Calif., USA

The second edition of the embedded world North America Exhibition & Conference will take place 4-6 November 2025, in Anaheim, Calif. This premier event for the embedded systems industry connects researchers, developers, industry and academia from all disciplines of the embedded world.

MIPI specifications will be the focus of six sessions within two tracks, MIPI I3C® Serial Bus and MIPI for Embedded Vision, on Thursday, 6 November. Read more below about the MIPI sessions, and register to join us in Anaheim.

MIPI members can use the registration code "EWNAMIPI" to receive either a free expo hall pass (worth $55) or a $45 discount on a full conference pass.

MIPI I3C Serial Bus

6 November 2025

An Introduction to MIPI I3C: The Next-Generation Serial Bus

Michele Scarlatella, MIPI Alliance

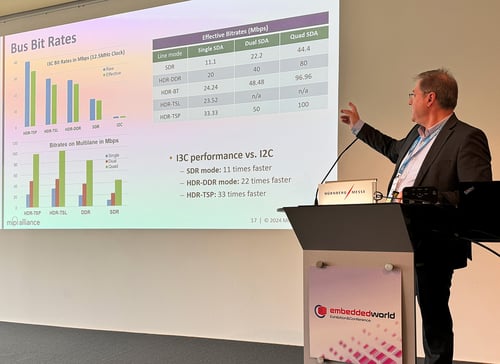

MIPI I3C (and the publicly available MIPI I3C Basic™) is a versatile, two-wire serial bus specification designed to connect peripherals to microcontrollers with high efficiency. As the evolutionary successor to I2C, MIPI I3C integrates the strengths of I2C, SPI, and UART, offering a powerful upgrade path with higher performance, lower power consumption, and reduced pin count.

This presentation provides a practical introduction to MIPI I3C, highlighting its value across a range of applications—from sensor control and memory sideband channels to server management, always-on imaging, debug communications, touchscreen interfaces, and power management.

This session should equip you with a solid understanding of how MIPI I3C can enhance system performance and simplify integration, including:

- The distinction between MIPI I3C and I3C Basic

- I3C bus configurations and device roles (primary/secondary controllers, I2C targets, bridging and routing devices)

- High-throughput capabilities, including double data rate and multi-lane operation

- Main bus transactions and command codes

- Use of dynamic addressing for simpler system design and streamlined driver development

MIPI I3C: The Digital Protocol You Didn’t Know You Needed

Jonathan Georgino, Binho, Co-Vice Chair of the MIPI I3C Working Group

The MIPI I3C interface solves longstanding challenges for embedded systems designers. Beyond delivering higher speeds, it is feature-rich, uses fewer pins, consumes less power and is straightforward to adopt. With built-in support for dynamic addressing, in-band interrupts, broadcast communication and intelligent bus management, I3C provides capabilities that once required the implementation of time-consuming vendor-specific hacks and workarounds.

This session will present real-world examples ranging from consumer products, such as AR/VR systems and camera-based smart home products, to enterprise and datacenter memory and storage devices, where designers have used I3C to simplify board layouts, reduce interrupt lines and consolidate communication paths, all while meeting growing performance and reliability demands. It will explain how I3C serves as a foundational technology for AI-enabled, battery-powered IoT and wearable devices, enabling experiences once thought impractical on resource- and power-constrained platforms.

The session will conclude with an overview of silicon availability, development tools, and an ecosystem readiness assessment — underscoring that I3C is available to use today and is already improving embedded system designs.

Enabling Next-Generation Embedded Vision Systems with MIPI I3C

Dominique Barbier, STMicroelectronics

Recent advances in AI and semiconductors have driven rapid growth in CSI-based embedded vision systems, from delivery robots to mobile barcode scanners. Yet current optimizations in size, weight, power, and data still fall short for some smart vision applications, or simply for achieving sufficient battery life in many products.

In this presentation, we will explore how MIPI I3C offers a practical solution to meet the requirements of next-generation embedded vision systems. We will begin with an overview of the latest innovations in imaging and processing solutions.

We will then delve into the key features and practical use cases of MIPI I3C, and share insights on leveraging it to implement innovative features such as auto-wake-up and event imaging.

The session will conclude with a forward-looking perspective on future innovations that will expand the capabilities and efficiency of embedded vision systems.

MIPI for Embedded Vision

6 November 2025

Beyond Image Data: Overcoming the Unique Challenges of Integrating Direct Time-of-Flight (dTOF) Sensors with MIPI CSI Interfaces

Karthick Kumaran Ayyalleuseshagiri Viswanathan, Meta

Direct Time-of-Flight (dTOF) technology has emerged as a promising solution for depth sensing in AR/VR applications, offering high accuracy and long-range capabilities. However, although dTOF sensors stream data over MIPI CSI interfaces, that data is not image data, which makes them quite different from traditional image sensors.

This talk will explore the unique challenges posed by this difference, including complex register settings, high data rates, C-PHY sensitivity, and power management issues. We will share our experiences and insights gained from tackling these challenges, providing valuable guidance for developers and engineers working on similar projects. By exploring the intricacies of dTOF sensor integration, we aim to facilitate the development of more sophisticated and accurate depth sensing systems for AR/VR applications.

FPGA Sensor Fusion for Edge AI Applications with Example MIPI Interfaces

Satheesh Chellappan, Lattice Semiconductor

FPGAs have long been the preferred solution for bridging disparate interfaces in complex systems. With the rapid growth of sensor deployments in industrial and automotive domains—driven by increasing AI workloads—there is a critical need for scalable and reusable sensor fusion solutions at the edge.

This paper presents a modular FPGA-based hardware design framework combined with parameterized firmware and host drivers to reduce development time and enhance system flexibility. We outline the key components required for implementing efficient sensor fusion using FPGAs in edge AI system architectures.

The discussion includes a breakdown of essential hardware modules for interfacing with diverse sensors, including MIPI CSI-2, along with host interfaces for control and data streaming. We also detail firmware considerations for managing sensor data transport and preprocessing at the edge processor, as well as host software drivers responsible for handling fused sensor data streams. Additional focus is given to protocols enabling dynamic discovery and enumeration of edge sensors, and to APIs for sensor configuration, event management, and debugging. A practical example flow is provided, demonstrating I3C as the host control interface alongside 10G Ethernet for high-speed streaming and/or control. This structured approach enables scalable and efficient sensor fusion solutions for evolving edge AI applications.

Confronting the Connectivity Challenge in High-Resolution Disposable Endoscopy

Matt Snell, Valens Semiconductor

The healthcare industry has been steadily moving toward single-use endoscopes, driven by the need to reduce infection risk and simplify clinical workflows. Regulatory agencies like the FDA have encouraged this shift in light of repeated incidents involving contaminated reusable scopes. While single-use devices eliminate many of the challenges tied to cleaning and sterilization, the transition has raised new technical hurdles, particularly when it comes to image quality. Modern procedures increasingly rely on high-resolution imaging to support accurate diagnostics and real-time decision-making. However, achieving that level of performance in a disposable device is not straightforward. These endoscopes must be compact, cost-effective, and power-efficient, leaving little room for the types of components traditionally used in high-bandwidth video systems.

A major barrier has been the lack of connectivity solutions that can maintain signal integrity under these constraints. Electromagnetic interference from electrosurgical tools is a particular problem in operating rooms, often degrading video quality or disrupting transmission altogether. Many conventional SerDes architectures are either too bulky, too power-hungry, or too sensitive to interference to be used effectively in this context. Emerging standards like MIPI A-PHY offer a potential path forward.